SLAMTEC RPLidar A2M8 / A2M12

This tutorial will guide you through the process of connecting a LiDAR sensor to your Leo Rover.

Light Detection and Ranging devices, or lidars for short, are mechanisms used for mapping the environment, object detection, tracking the speed of vehicles and in a wide range of other applications. In robotics 2D lidars, like A2M8 / A2M12, are used for things such as indoor SLAM (Simultaneous localization and mapping) or safety systems.

What to expect?

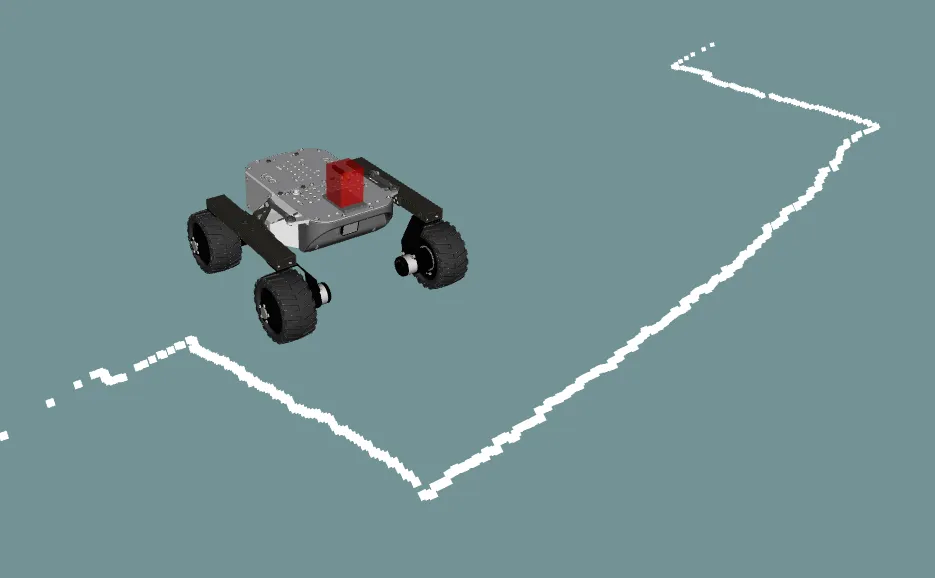

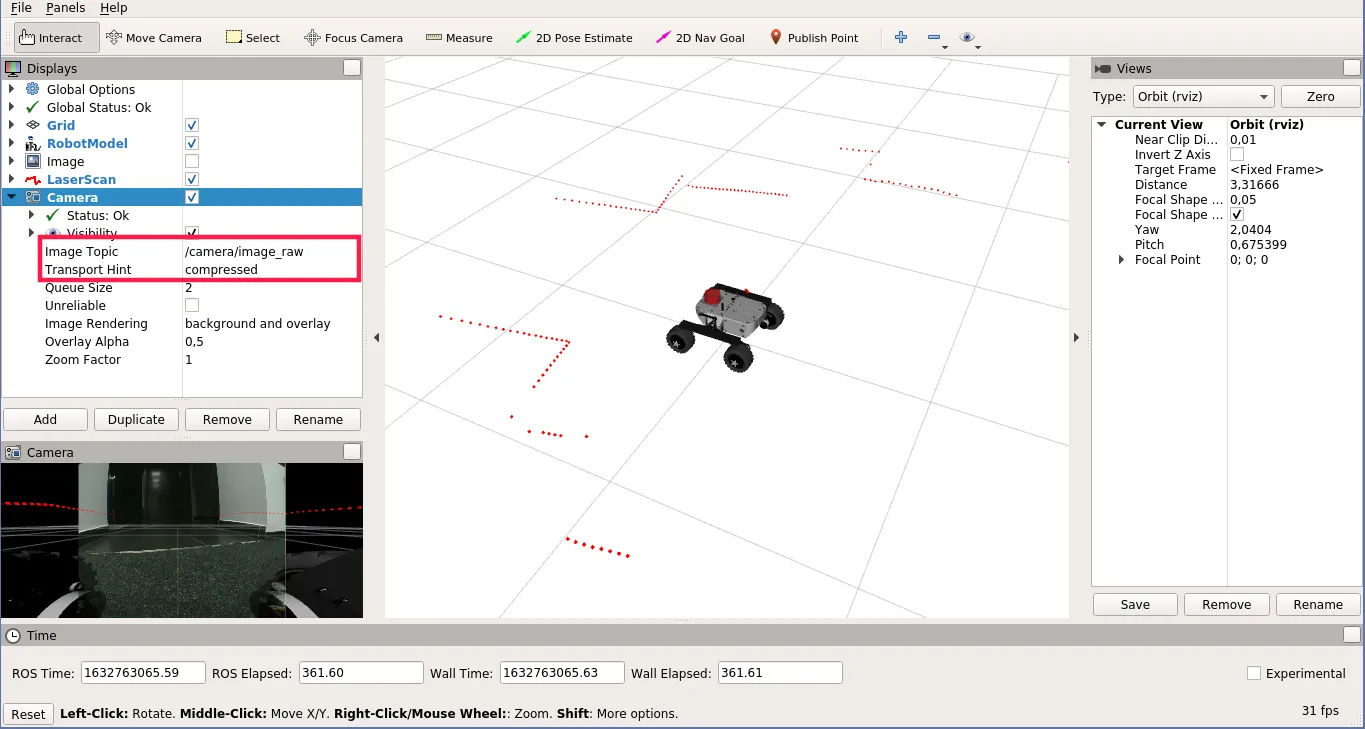

After finishing the tutorial you should be able to both gather the lidar data and visualize it using RViz. Just like in the image below:

Prerequisites

Referenced products

List of components

- RPLidar A2

- RPLidar A2 adapter plate (can be found here: Addon adapters)

- M5x10 Allen head screw x4

- M3x6 Allen head screw x4

Mechanical integration

We developed 3D printable models of mechanical interfaces that allow you to mount the aforementioned sensor to the mounting plate of the rover. Locating the sensor the top of the robot provides a wide field of view with not many obstacles for the laser beam to get caught on. Get the files from here: Addon Adapters.

- With 4 x M3x6 Allen screws connect the sensor to the printed interface plate.

- Use 4 x M5x10 Allen screws to fasten the sensor to the Leo Rover.

Wiring and electronics connection

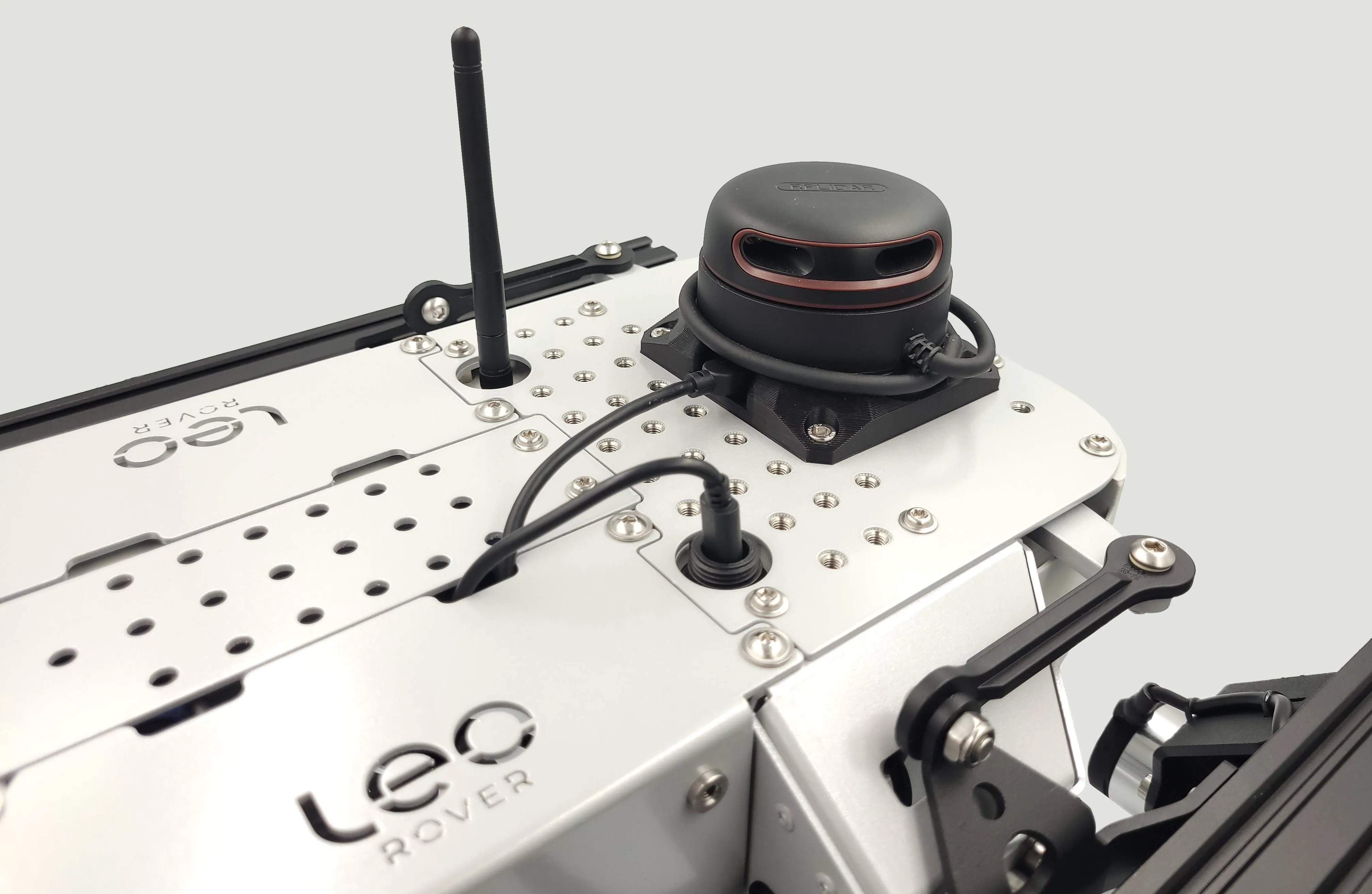

The sensor can be connected to the robot's main computer via the USB socket positioned at the top of the rover. If your USB cable is long it might get into lidars field of view if it sticks up too much. try to hide it in the empty space inside Leo Rovers back frame. Just like on the photo below:

When mounting the sensor, you should be particularly careful not to obstruct the field of view by other parts of the Rover.

The sensor can be connected to the robot's main computer via the USB socket positioned at the top of the rover.

USB connection provides power to the sensor and allows the data transfer. This means that no external power sources are necessary. Some lidars might need external power connections, that's when Powerbox might come in handy.

With everything connected you are ready to try out your new sensor.

Software integration

The first thing you can do is to make sure your device has the correct permissions and is available at the fixed path on your system. To do this, you can add the following rule to the udev service:

KERNEL=="ttyUSB*", ATTRS{idVendor}=="10c4", ATTRS{idProduct}=="ea60", MODE="0666", GROUP="dialout", SYMLINK+="lidar"

Paste these lines to /etc/udev/rules.d/lidar.rules file and reload udev rules

by typing:

sudo udevadm control --reload-rules && sudo udevadm trigger

Your device should now be available at the /dev/lidar path.

We want the sensor functionality to be available in the ROS ecosystem, so you should install a ROS package that provides a node for the sensor you are trying to integrate.

sudo apt install ros-${ROS_DISTRO}-rplidar-ros

Now, create a launch file that would start the node with a fitting configuration.

<launch>

<node name="rplidar_node" pkg="rplidar_ros" type="rplidarNode" output="screen">

<param name="serial_port" value="/dev/lidar"/>

<param name="frame_id" value="laser_frame"/>

</node>

</launch>

Include your launch file in the robot.launch file, so that your node will

start at boot.

In /etc/ros/robot.launch:

<include file="/etc/ros/laser.launch"/>

Your robot should be aware of where the sensor is located and what space it occupies. You can ensure it does that by creating a URDF model of the sensor.

<?xml version="1.0"?>

<robot>

<link name="rplidar_link">

<visual>

<origin xyz="0 0 0.003"/>

<geometry>

<box size="0.079 0.086 0.006"/>

</geometry>

<material name="support">

<color rgba="0.5 0.5 0.5 1.0"/>

</material>

</visual>

<visual>

<origin xyz="0 0 0.023"/>

<geometry>

<cylinder radius="0.038" length="0.04"/>

</geometry>

<material name="lidar">

<color rgba="1.0 0.0 0.0 0.7"/>

</material>

</visual>

<collision>

<origin xyz="0 0 0.003"/>

<geometry>

<box size="0.079 0.086 0.006"/>

</geometry>

</collision>

<collision>

<origin xyz="0 0 0.023"/>

<geometry>

<cylinder radius="0.038" length="0.04"/>

</geometry>

</collision>

</link>

<joint name="rplidar_joint" type="fixed">

<origin xyz="0.0775 0 0"/>

<parent link="base_link"/>

<child link="rplidar_link"/>

</joint>

<link name="laser_frame"/>

<joint name="laser_joint" type="fixed">

<origin xyz="0 0 0.0368" rpy="0 0 ${-pi/2}"/>

<parent link="rplidar_link"/>

<child link="laser_frame"/>

</joint>

</robot>

And including it in the description that is uploaded at boot.

<xacro:include filename="/etc/ros/urdf/laser.urdf.xacro"/>

You can experiment with the URDF file and create a more representative model of the sensor by adding more visual and collision tags or by including meshes in STL or COLLADA format.

The last step is to either reboot the robot or restart the leo service.

sudo systemctl restart leo

Examples

Reading and visualizing the data

The robot should now publish the

LaserScan messages

on the /scan topic. You can check the raw data that it sends by typing:

rostopic echo /scan

If you have ROS installed on your computer, you can get a more graphical representation of the data with RViz. If you don't have ROS, you can follow this guide:

Before starting RViz, make sure you completed the Connecting another computer to ROS network section of ROS Development tutorial:

Now, open RViz by typing rviz in the terminal, or, if you have the leo_viz

package installed, type:

roslaunch leo_viz rviz.launch

This will start RViz with visualization of the current robot model.

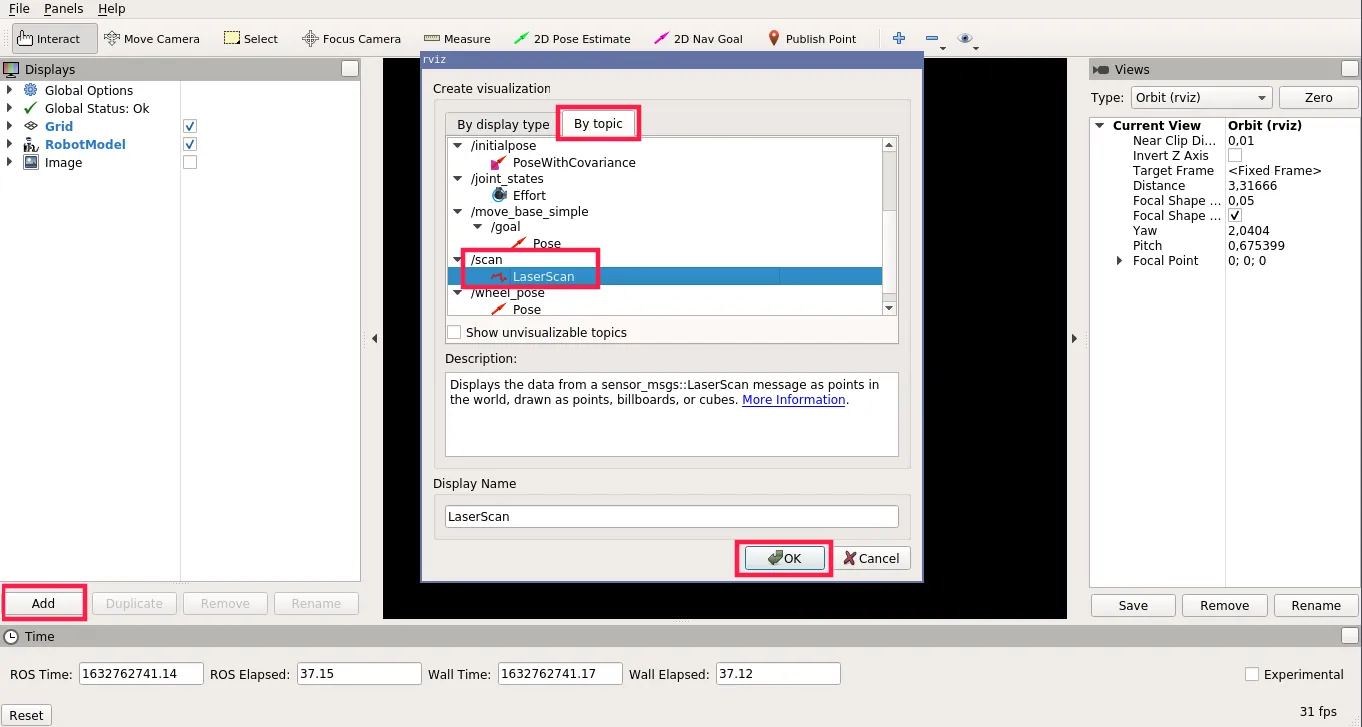

On the Displays panel click Add -> By topic and search for the /scan

topic. Choose the LaserScan display and click Ok.

You should now be able to see the data from the sensor visualized as points in 3D space.

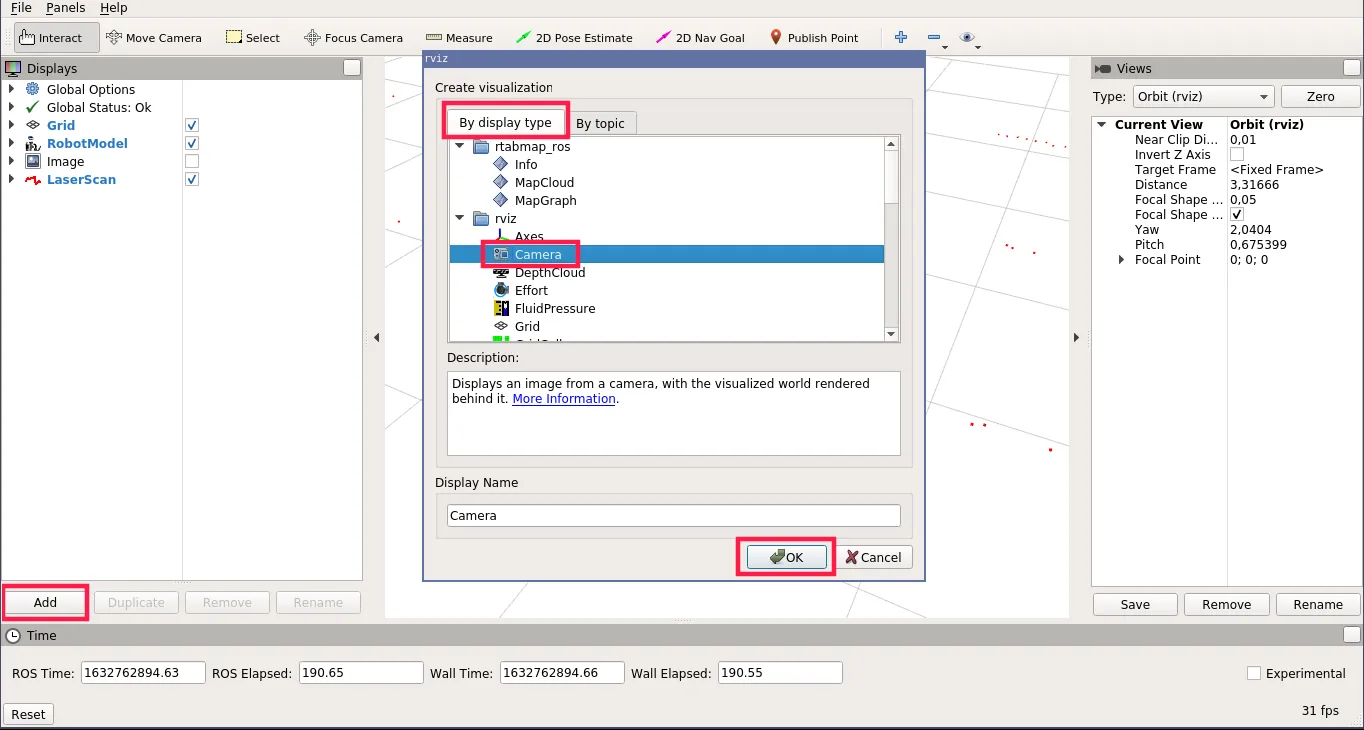

To put the points into the camera image, you can also add the Camera display.

Be sure to check compressed as the Transport Hint and /camera/image_raw as

the Image Topic.

Here's an example end result:

What next?

Lidars are commonly used in projects involving autonomous navigation, you might be interested in a tutorial about it.

They are however, not the only way of teaching a Leo Rover how to move on it's own. Check out our line follower tutorial if you want to learn more. You can also check our Integrations page for more instructions.